IA Cobotics Lab

Through research across diverse areas, we strive to unlock the true potential of cobotics and make it an integral part of the future of many industries and domains. Our research spans several key areas, including:

- Autonomous cobots and automation

- Robotic vision and sensor fusion

- AI-powered intelligence for cobotics

- Strategies for Human-Robot collaboration

- Rapidly integrable solutions

- Soft robotics

- Healthcare collaborative robots

- Evaluation, deployment, management, and monitoring of robots in various applications

Academic Research Team

A/Prof Ehsan Asadi

Team Leader, Robotics, IEEE Senior Member, A/Editor IEEE RA-L

Prof Alireza Bab-Hadiashar

Research Leader, Visual-AI, Intelligent Automation (IA) Group

A/Prof Hamid Khayyam

Deputy Leader, Modelling, Control, Complex Systems, AI, Energy

Prof Reza Hoseinnezhad

Multi-Sensor Fusion & Machine Vision

Dr Amirali K-Gostar

Multi-Sensor Fusion & Machine Vision

Dr Ruwan Tennakoon

AI and Machine Learning

HDR Research Team

Christian Milianti

HDR Candidate

Data-efficient Machine Learning for Robotic Perception and Manipulation

Thilina Tharanga Malagalage Don

HDR Candidate

Extended Robotic Reality for Robot Learning

Shanuka Dodampegama

HDR Candidate

Robotic Intelligence for Construction Waste Sorting

Subash Gautam

HDR Candidate

Vision Systems for Processes Control in Metal Additive Manufacturing

Shayan Azizi

HDR Candidate

Developing the Next Generation Materials Science Lab

Umair Naeem

HDR Candidate

Research Advisers

Prof Ivan Cole

Additive Manufacturing

Prof Olga Troynikov

Human-Centered Automation

Prof Stuart Bateman

Aerospace & Advanced Manufacturing

Prof Mark Easton

Manufacturing & Materials

Research Projects

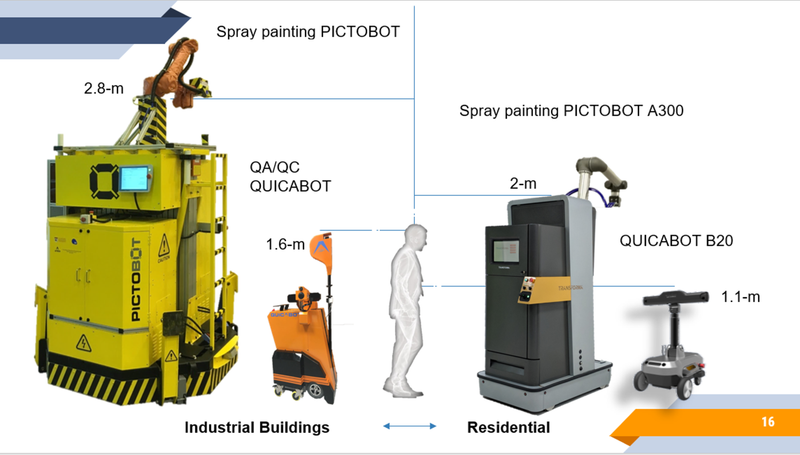

Intelligent Automation and Service Robots for Infrastructure and Construction

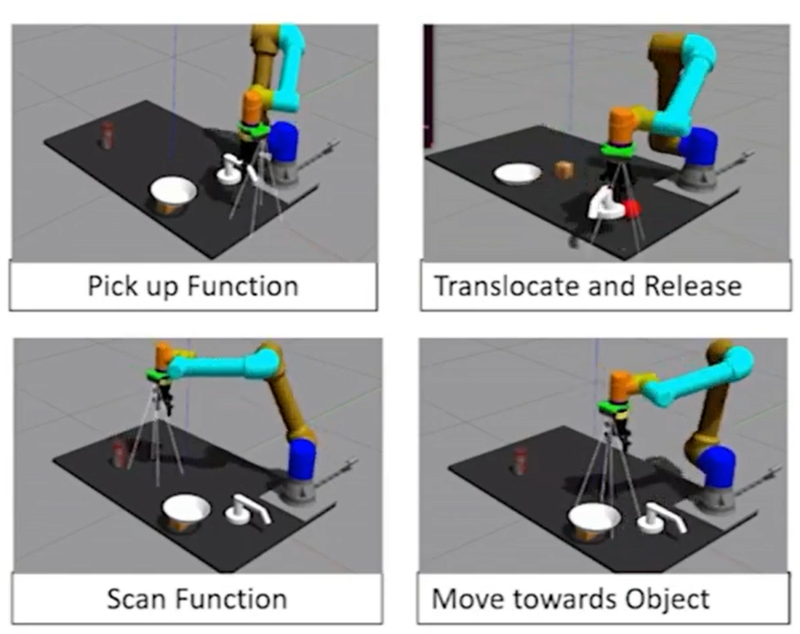

Deploying robots in collaboration with humans is seen as an enabler of major gains in construction productivity across tasks such as digital twin creation, quality and compliance inspection, progress monitoring, and automated interior finishing.

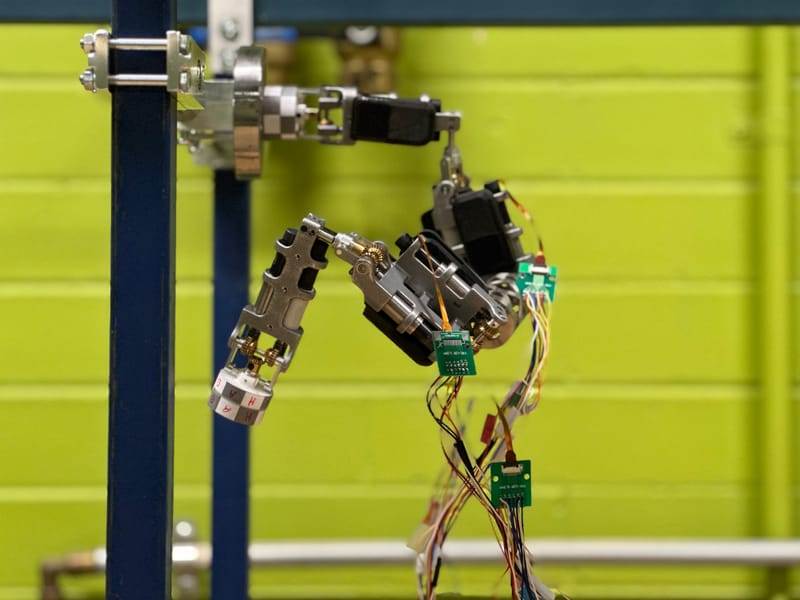

Robot Mechanisms and Systems Design

IEEE Transactions on Industrial Electronics, 2020; IROS 2020; Mechanism and Machine Theory; Robotics and Computer-Integrated Manufacturing; IFToMM Symposium on Robot Design, Dynamics and Control

Extended Reality (XR) in Robotics

XR devices offer a range of spatial perception capabilities that can enhance human-robot collaboration, from 6DoF tracking to depth perception, gesture recognition, and environmental mapping. These features enable human-robot interaction applications in manufacturing, healthcare, and beyond. XR devices continue to push the boundaries of spatial perception and redefine how we interact with robots.

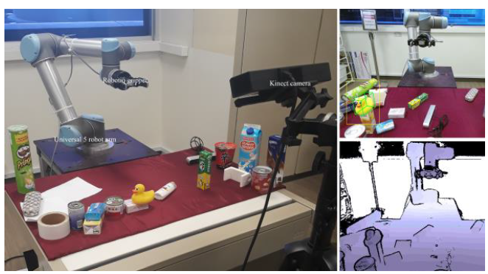

Robotic Vision and Perception


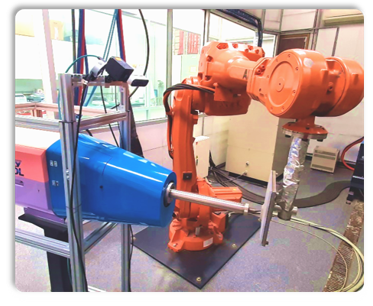

Robotic Additive Manufacturing

Robotic additive manufacturing is a technology that deposits material to fabricate complex parts. Deviations in part geometry that arise during the process can negatively affect the shape and size of the final manufactured object. In-process spatio-temporal 3D reconstruction, also known as 4D reconstruction, allows deviations from the design to be detected early, providing the opportunity to correct them at an early stage and making the process more robust, efficient and productive.
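As a rough illustration of early deviation detection, the sketch below compares a time-ordered series of reconstructed point sets against design points and reports the first snapshot that drifts out of tolerance. It is a simplified stand-in, not the lab's reconstruction pipeline: the function names, the brute-force nearest-point comparison, and the tolerance value are all assumptions made for the example.

```python
import math

def nearest_distance(point, design_points):
    """Distance from one reconstructed point to the closest design point."""
    return min(math.dist(point, d) for d in design_points)

def out_of_tolerance(scan_points, design_points, tolerance):
    """Reconstructed points lying further than `tolerance` from the design."""
    return [p for p in scan_points
            if nearest_distance(p, design_points) > tolerance]

def first_deviating_snapshot(snapshots, design_points, tolerance):
    """Index of the earliest snapshot containing a deviation, or None."""
    for index, scan in enumerate(snapshots):
        if out_of_tolerance(scan, design_points, tolerance):
            return index
    return None

# Hypothetical data: a straight design bead sampled every 0.1 units along x.
design = [(x / 10, 0.0, 0.0) for x in range(11)]
snapshots = [
    [(0.0, 0.0, 0.0), (0.5, 0.01, 0.0)],  # within tolerance
    [(0.3, 0.0, 0.0), (0.6, 0.5, 0.0)],   # second point has drifted in y
]
deviating = first_deviating_snapshot(snapshots, design, tolerance=0.05)
```

With this toy data the second snapshot is flagged, which is the point at which a corrective action could be triggered. A practical system would use a spatial index and a dense surface model rather than a brute-force point comparison.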

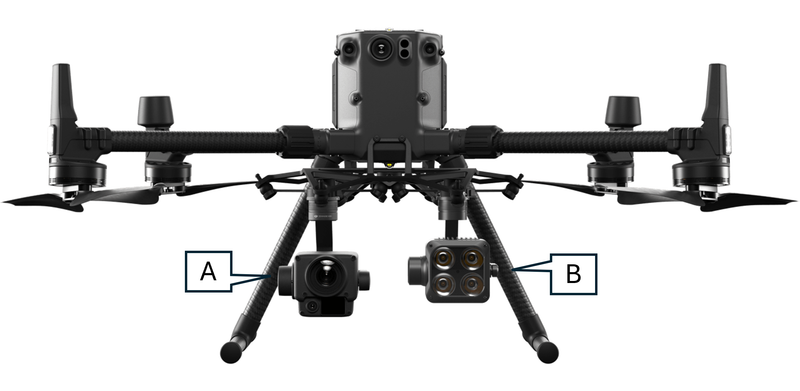

Aerial Imaging-Based Soiling Detection on Solar Photovoltaic Panels

Our study, conducted in Melbourne's suburban areas, highlights two main types of soiling on photovoltaic (PV) panels: dust and bird droppings. Dust accumulation results from natural wind and climate conditions. Bird droppings, which we specifically distinguished from debris such as leaves and from shadows, can significantly reduce solar efficiency even in small amounts. The size and precise location of bird droppings are especially critical, as they can lead to "hotspots" that reduce power output and, over time, cause permanent damage to the panel.

The cleaning methods differ as well: dust can be removed with low-pressure water jets, but bird droppings require specialized cleaning solutions for effective removal. Our dataset was carefully curated to reflect these distinct cleaning needs.

In the dataset, dust and bird droppings appear in a ratio of approximately 1:2, which introduces a class imbalance. Bird droppings also present a unique detection challenge: they are small, irregular in shape, and typically cover less than 2% of the panel's surface area, in contrast to larger, more uniform dust patches. The dataset includes polycrystalline panels with a blue background, on which bird droppings often appear as white or gray spots. It provides a valuable resource for developing advanced soiling detection methods, contributing to optimized cleaning schedules and improved solar panel efficiency.
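One common way to compensate for a class imbalance such as the 1:2 dust-to-bird-droppings ratio is to weight each class inversely to its frequency during training. The sketch below shows that calculation under stated assumptions: the counts are hypothetical values chosen only to match the 1:2 ratio, and the function name is illustrative rather than taken from the study.

```python
def inverse_frequency_weights(class_counts):
    """Weight each class by total / (number_of_classes * class_count).

    Rarer classes receive larger weights, so each class contributes
    equally to a weighted loss in aggregate.
    """
    total = sum(class_counts.values())
    n_classes = len(class_counts)
    return {label: total / (n_classes * count)
            for label, count in class_counts.items()}

# Hypothetical counts that reproduce the approximate 1:2 ratio.
counts = {"dust": 100, "bird_droppings": 200}
weights = inverse_frequency_weights(counts)
```

Here the less frequent dust class receives twice the weight of the bird droppings class. Whether weighting, resampling, or another strategy suits a given detector is a design choice that depends on the model and the evaluation metric.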

Robotic Challenges and Capstones

Advanced Robotics System Course

Postgraduate Students: Engagement with Cobots, 2023

Contact

- 58 Cardigan St, Carlton, Melbourne, Victoria, Australia